Compar:IA

Open Source LLM Arena

Collect human preference datasets for less-resourced languages and specific sectors, while raising awareness about model diversity, bias, and environmental impact.

Built by the French government, now growing into new languages and sectors.

🇫🇷 French platform · 🇩🇰 Danish platform

How does it work?

```mermaid
flowchart LR
U["👤 Ask"] --> A["🤖 Compare"] --> V["🗳️ Vote"] --> R["🔍 Reveal"]
R --> L["🏆 Leaderboard"]
R --> T["🧠 Rare data for model training"]
R --> M["🗺️ Use case mapping"]
R --> E1["💡 Model diversity"]
R --> E2["⚖️ Bias awareness"]
R --> E3["🌱 Env. impact"]
style U fill:#f0f4ff,stroke:#3558a2
style A fill:#f0f4ff,stroke:#3558a2
style V fill:#f0f4ff,stroke:#3558a2
style R fill:#f0f4ff,stroke:#3558a2
style E1 fill:#e8f5e9,stroke:#388e3c
style E2 fill:#e8f5e9,stroke:#388e3c
style E3 fill:#e8f5e9,stroke:#388e3c
style L fill:#fff3e0,stroke:#e65100
style T fill:#fff3e0,stroke:#e65100
style M fill:#fff3e0,stroke:#e65100
```

🟦 User journey · 🟩 Awareness value · 🟧 Dataset value
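The 🏆 Leaderboard branch turns many pairwise votes into a single ranking. This README does not say which aggregation method Compar:IA uses, so the sketch below assumes a plain sequential Elo update, a common choice for LLM arenas (a Bradley-Terry fit is a frequent alternative). The vote tuple layout, the model names, and the `k` and `base` parameters are all hypothetical.

```python
from collections import defaultdict

# Hypothetical vote format (not Compar:IA's schema):
# (model_a, model_b, outcome) with outcome in {"a", "b", "tie"}.
Vote = tuple[str, str, str]

# Score credited to model_a for each outcome.
SCORE_A = {"a": 1.0, "b": 0.0, "tie": 0.5}


def elo_ratings(votes: list[Vote], k: float = 32.0, base: float = 1000.0) -> dict[str, float]:
    """Apply one Elo update per vote, in order; unseen models start at `base`."""
    ratings: defaultdict[str, float] = defaultdict(lambda: base)
    for model_a, model_b, outcome in votes:
        ra, rb = ratings[model_a], ratings[model_b]
        # Expected score of model_a under the Elo logistic model (400-point scale).
        expected_a = 1.0 / (1.0 + 10 ** ((rb - ra) / 400.0))
        delta = k * (SCORE_A[outcome] - expected_a)
        # Zero-sum update: whatever model_a gains, model_b loses.
        ratings[model_a] = ra + delta
        ratings[model_b] = rb - delta
    return dict(ratings)


def leaderboard(votes: list[Vote]) -> list[tuple[str, float]]:
    """Return (model, rating) pairs sorted from highest to lowest rating."""
    return sorted(elo_ratings(votes).items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    votes = [
        ("model-x", "model-y", "a"),
        ("model-y", "model-z", "tie"),
        ("model-x", "model-z", "a"),
    ]
    for rank, (model, rating) in enumerate(leaderboard(votes), start=1):
        print(f"{rank}. {model}: {rating:.1f}")
```

Sequential Elo depends on the order in which votes are processed, which is why arenas often shuffle or bootstrap over many orderings; a batch Bradley-Terry fit avoids that dependence.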

🇫🇷 The French use case


Launched in October 2024 by DINUM and the French Ministry of Culture to address the lack of French-language preference data for LLM training and evaluation. Since launch: 600,000+ prompts, 250,000+ preference votes, and 300,000+ visitors. The result is one of the largest non-English human preference datasets available, all published openly on Hugging Face.

We have published a pre-print detailing the project's strategy in France.

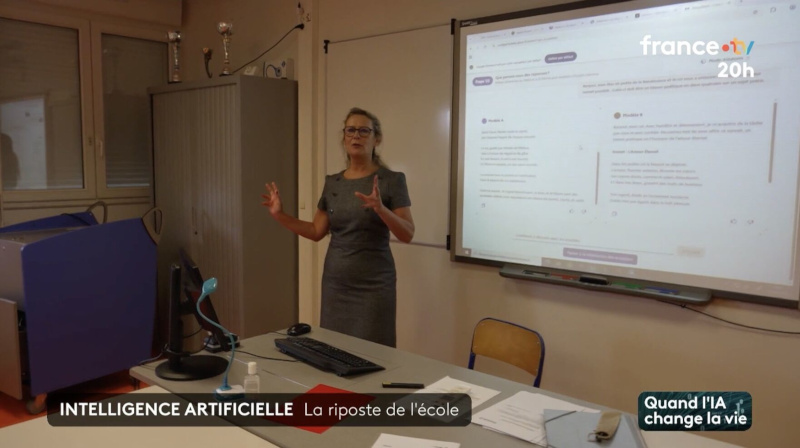

Compar:IA featured on the France 2 evening news and used in classrooms to teach students about AI models, bias, and environmental impact.

For whom?

🌍 Languages. Most LLMs underperform outside English, and Compar:IA collects the preference data needed to close this gap. Already live in French and Danish, with launches planned in Sweden, Estonia, and Lithuania.

🏛️ Sectors. Generic benchmarks miss domain-specific needs. A sector arena reveals which models best handle the specialised language of fields such as healthcare, law, education, public administration, or agriculture.

🏢 Organisations. Run your own arena, evaluate models on your real-world tasks, and contribute data back to the commons. For governments, universities, hospitals, companies, NGOs, and more.

Benefits

💡 Raise awareness. Teach citizens and professionals about model diversity, bias, and environmental cost. Already used in schools and training sessions.

📊 Generate rare datasets. Produce instruction and preference data in less-resourced languages.

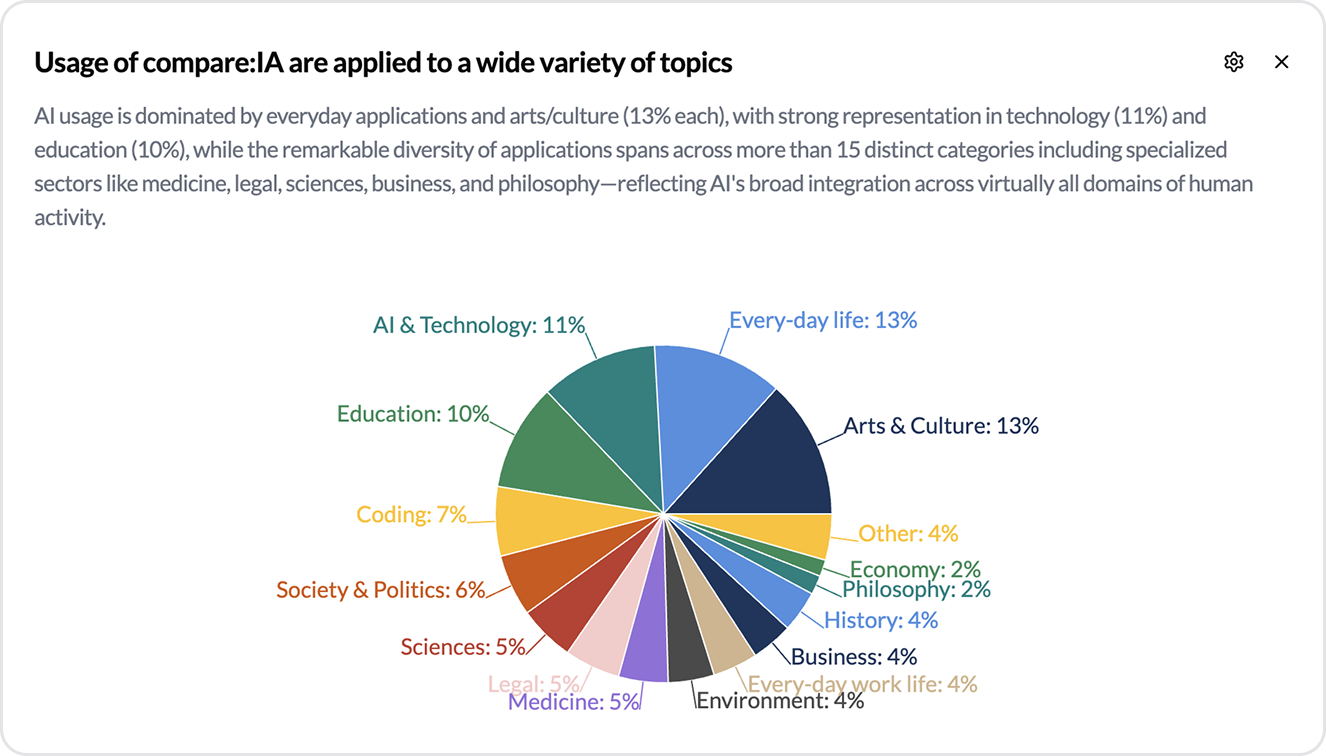

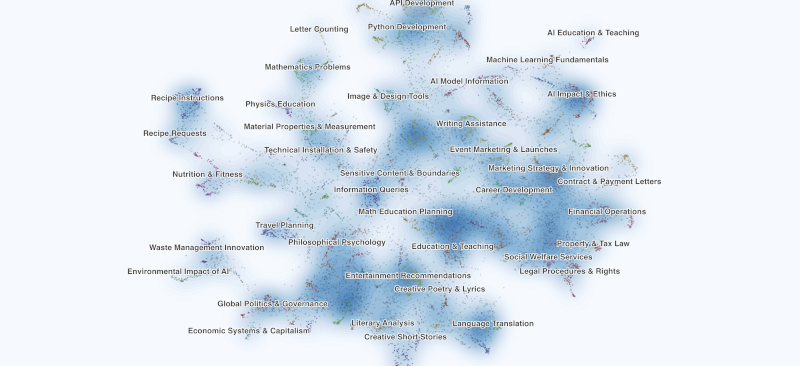

🔁 Downstream reuse. Data feeds into new model training, leaderboards, use case mappings, and other research topics.
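On the dataset side, a pairwise vote maps naturally onto the prompt/chosen/rejected layout used by many preference-tuning tools (for example, the `prompt`, `chosen`, and `rejected` columns expected by Hugging Face TRL's DPO trainer). The sketch below assumes a hypothetical `ArenaVote` record; its field names do not reflect the schema of the published Compar:IA datasets, and it simply drops ties.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ArenaVote:
    """Hypothetical shape of one arena comparison (not the published schema)."""
    prompt: str
    response_a: str
    response_b: str
    winner: Optional[str]  # "a", "b", or None when the user expressed no preference


def to_preference_pairs(votes: list[ArenaVote]) -> list[dict[str, str]]:
    """Convert arena votes into prompt/chosen/rejected records, skipping ties."""
    pairs: list[dict[str, str]] = []
    for vote in votes:
        if vote.winner == "a":
            chosen, rejected = vote.response_a, vote.response_b
        elif vote.winner == "b":
            chosen, rejected = vote.response_b, vote.response_a
        else:
            continue  # a tie gives no chosen/rejected signal in this format
        pairs.append({"prompt": vote.prompt, "chosen": chosen, "rejected": rejected})
    return pairs


if __name__ == "__main__":
    votes = [
        ArenaVote("What is the capital of Denmark?", "Copenhagen.", "Oslo.", "a"),
        ArenaVote("Summarise this text.", "Summary A", "Summary B", None),
    ]
    print(to_preference_pairs(votes))
```

Whether to discard ties or keep them under a separate label is a design choice that depends on the downstream training objective.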

Interested in an arena for your language, sector, or organisation?

The platform is fully open source, self-hostable, and customizable: choose your models, translate the interface, adapt prompt suggestions, add your logo. We can host it for you or help you set it up yourself.

Whatever your situation, reach out first and we'll figure out the best path together.

Contribute, we need you 🤝

Compar:IA is a digital common. Whether you can offer funding, code, translations, or simply ideas, there is a place for you.

💰 Financially. Compar:IA has been funded by DINUM and the French Ministry of Culture, with European support from ALT-EDIC. We are actively looking for new partners and funders to sustain the infrastructure, expand to new languages, and keep the project independent. Contact us by email.

💻 In code. The entire platform is open source and we welcome contributions of all sizes: bug fixes, new features, translations, documentation. Come build with us. GitHub repository

💬 In discussions. Share your ideas, flag issues, or just ask questions on GitHub Discussions. We want to hear from you. GitHub Discussions

Any other way. Partnerships, academic collaborations, media coverage, spreading the word: every contribution matters. Reach out and let's talk. Contact us

Roadmap

🟢 In Progress

- EcoLogits update #253 (🇪🇺 ALT-EDIC, 🇫🇷 DINUM)

- Gradio → FastAPI migration (🇫🇷 Ministry of Culture, 🇫🇷 DINUM, 🇪🇺 ALT-EDIC)

- Language/platform-specific model support (🇪🇺 ALT-EDIC, 🇫🇷 DINUM)

- Dataset publication pipeline configurable per language/platform, with customizable publication delays and anonymization pipelines (🇪🇺 ALT-EDIC, 🇫🇷 DINUM)

🔮 Up Next

- Web search and document upload

- Authentication

- Style control #273

- Ranking consolidation and internationalization

- Message history

- Easier deployment and streamlined onboarding

- Improved anonymization pipeline

- Live use-case mapping

⛵ Shipped

- Dataset publishing pipeline v1 (🇫🇷 DINUM, 🇫🇷 Ministry of Culture)

- Leaderboard v1 (🇫🇷 DINUM, 🇫🇷 Ministry of Culture, in collaboration with 🇫🇷 PEReN)

- Archived models (🇫🇷 DINUM, 🇫🇷 Ministry of Culture)

- Blog section (🇫🇷 DINUM, 🇫🇷 Ministry of Culture)

- Internationalization foundations (🇫🇷 DINUM, 🇫🇷 Ministry of Culture)

- Compar:IA v1 (🇫🇷 DINUM, 🇫🇷 Ministry of Culture)

👉 Full technical roadmap on GitHub

Getting started

The platform is fully open source and self-hostable. The quickest way to get running:

```bash
cp .env.example .env   # Configure environment
make install           # Install all dependencies
make dev               # Start backend + frontend
```

For the full setup guide (Docker, manual setup, testing, database, models, i18n, architecture), see CONTRIBUTING.md.