Oxide Lab

Private, powerful and easy-to-use AI chat right on your computer

📚 Table of Contents

- What is this?

- Who is this app for?

- Key Features

- Recognition

- Installation & Setup

- How to Start Using

- Interface Features

- Privacy and Security

- Tips and Recommendations

- System Requirements and Limitations

- Support the Project

- Acknowledgments

✨ What is this?

Oxide Lab is a modern desktop application for communicating with AI models that runs completely locally on your computer. No subscriptions, no data sent to the internet — just you and your personal AI assistant.

🎯 Who is this app for?

- AI enthusiasts who want to experiment with models locally

- Privacy-conscious users who want their data to stay on their own machine

- Researchers who need control over generation parameters

- Creative minds who use AI for writing, brainstorming and inspiration

🚀 Key Features

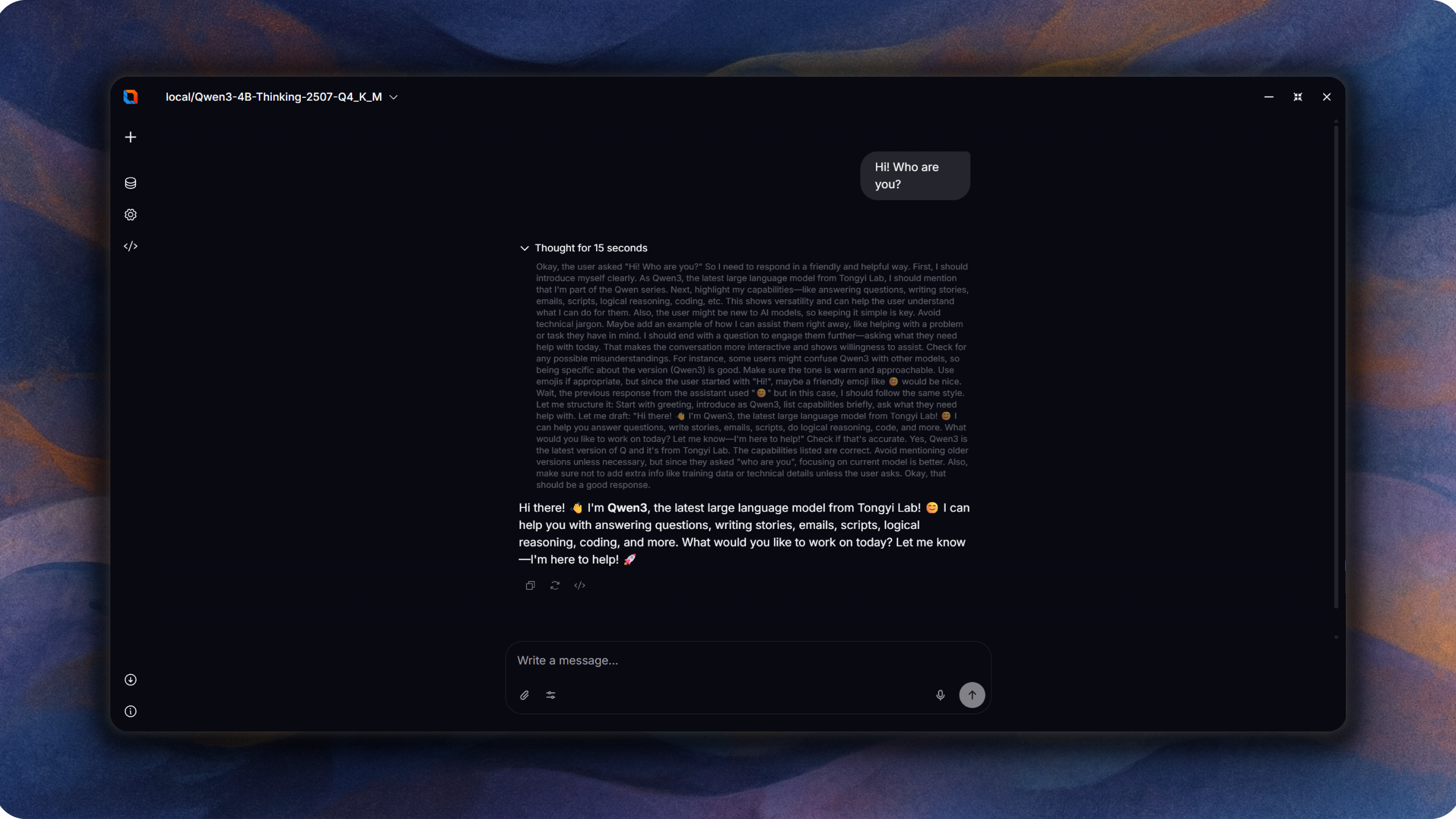

💬 Smart Chat Interface

- Modern and intuitive design

- Real-time streaming responses

- Support for text and code formatting

- Voice input powered by local Whisper

- Voice input meter with language selection

🧠 Thinking Mode

- Enable the "Thinking" feature and watch the AI reason

- See the analysis process before the final answer

- Get higher-quality, more thoughtful solutions to complex tasks
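To make the idea concrete, here is a minimal sketch of separating the reasoning from the final answer. It assumes the reasoning arrives wrapped in `<think>...</think>` tags, which is how Qwen3 models typically emit it; the function name `split_thinking` is hypothetical, and this is not necessarily how Oxide Lab handles it internally.

```python
import re

# Assumption: reasoning is wrapped in <think>...</think> tags.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thinking(text: str) -> tuple[str, str]:
    """Return (thinking, answer) extracted from a raw model response."""
    thinking = "\n".join(part.strip() for part in THINK_RE.findall(text))
    answer = THINK_RE.sub("", text).strip()
    return thinking, answer


raw = "<think>2 + 2 is basic arithmetic.</think>\nThe answer is 4."
thinking, answer = split_thinking(raw)
print("thinking:", thinking)
print("answer:", answer)
```

A response without any tags comes back with an empty thinking part and the full text as the answer.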

⚙️ Flexible Settings

- Temperature — control response creativity

- Top-K, Top-P, Min-P — fine-tune generation style

- Repeat Penalty — avoid repetitions

- Context Length — depends on model and device resources
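For readers curious about what these parameters do, the sketch below shows one common way they are applied to a model's raw scores (logits) when picking the next token. It is an illustration only: the function name `sample_token`, the default values, and the order of the filters are assumptions, and Oxide Lab's actual sampler may differ.

```python
import math
import random


def sample_token(logits, history, temperature=0.7, top_k=20, top_p=0.8,
                 min_p=0.0, repeat_penalty=1.1, rng=random):
    """Pick one token id from raw logits (a list of floats indexed by token id)."""
    logits = list(logits)
    # Repeat penalty: make tokens that already appeared less likely.
    for tok in set(history):
        if logits[tok] > 0:
            logits[tok] /= repeat_penalty
        else:
            logits[tok] *= repeat_penalty
    # Temperature (must be > 0): below 1 sharpens the distribution, above 1 flattens it.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    weights = [math.exp(x - peak) for x in scaled]
    total = sum(weights)
    probs = sorted(((w / total, i) for i, w in enumerate(weights)), reverse=True)
    # Top-K: keep only the K most likely tokens.
    probs = probs[:top_k]
    # Min-P: drop tokens less likely than min_p times the most likely token.
    probs = [(p, i) for p, i in probs if p >= min_p * probs[0][0]]
    # Top-P: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for p, i in probs:
        kept.append((p, i))
        cumulative += p
        if cumulative >= top_p:
            break
    return rng.choices([i for _, i in kept], weights=[p for p, _ in kept])[0]


# Toy vocabulary of four tokens; token 0 was already generated once.
print(sample_token([2.0, 1.0, 0.5, -1.0], history=[0], rng=random.Random(0)))
```

Setting `top_k=1` makes the choice deterministic (always the most likely token), which is a quick way to see how strongly the filters narrow the candidates.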

🔧 Easy Setup

- Support for local Qwen3 models in GGUF format (support for other models is planned)

- Intelligent memory management

🎖️ Recognition

Oxide Lab has been recognized by the community for its quality and innovation:

- 🏆 Featured in Awesome Tauri — curated list of quality Tauri applications

- 🏆 Featured in Awesome Svelte — curated list of quality Svelte projects

🛠️ Installation & Setup

Prerequisites

Before installing Oxide Lab, ensure you have the following installed:

- Rust and Node.js (with npm), which the build commands below rely on

For GPU Acceleration (Optional but Recommended)

- CUDA 12.0+ for NVIDIA GPUs (Windows/Linux)

Installation Steps

Clone the repository:

    git clone https://github.com/FerrisMind/Oxide-Lab.git
    cd Oxide-Lab

Install dependencies:

    npm install

Run in development mode:

    # For CPU-only mode
    npm run tauri:dev:cpu
    # For CUDA GPU mode (if CUDA is available)
    npm run tauri:dev:cuda

Build for production:

    # CPU-only build
    npm run tauri:build:cpu
    # CUDA build
    npm run tauri:build:cuda

System Requirements

- OS: Windows 10/11 (Linux and macOS support is planned but not yet available)

- RAM: Minimum 4GB, Recommended 8GB+

- Storage: 500MB for application + model size

- GPU: Optional, but recommended for better performance

Troubleshooting

- If you encounter build issues, ensure Rust and Node.js are properly installed

- For GPU support, verify CUDA installation

- Check the Issues page for common problems
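A quick way to act on the first two checks is to confirm that the relevant tools are on your PATH. The sketch below is a small helper, not part of Oxide Lab; the tool list is an assumption inferred from the build steps (`cargo` ships with Rust, and `nvcc` is part of the CUDA toolkit and only matters for the CUDA build).

```python
import shutil

# Assumed tool names; nvcc is only needed for the CUDA build.
REQUIRED = ["rustc", "cargo", "node", "npm"]
OPTIONAL = ["nvcc"]


def check_tools(names):
    """Map each tool name to its location on PATH, or None if it is missing."""
    return {name: shutil.which(name) for name in names}


for name, path in check_tools(REQUIRED + OPTIONAL).items():
    label = "optional" if name in OPTIONAL else "required"
    print(f"{name:6} ({label}): {path or 'NOT FOUND'}")
```

Any required tool reported as `NOT FOUND` is a likely cause of a failed build.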

📖 How to Start Using

1️⃣ Get the Model

Download a model in .gguf format together with its tokenizer.json file:

- Recommended models: Qwen3 4B (and other Qwen3 variants in GGUF)

- Where to download: Hugging Face, official model repositories
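After downloading, you may want to check that the files look right before loading them. The sketch below relies on the fact that GGUF files begin with the four ASCII bytes `GGUF`; the function name `check_model_files` and the idea that `tokenizer.json` sits in the same folder as the model are assumptions made for illustration.

```python
from pathlib import Path


def check_model_files(model_path):
    """Return a list of problems found; an empty list means the files look usable."""
    model = Path(model_path)
    if not model.is_file():
        return [f"model file not found: {model}"]
    problems = []
    # GGUF files start with the ASCII magic bytes "GGUF".
    with model.open("rb") as f:
        if f.read(4) != b"GGUF":
            problems.append("file does not start with the GGUF magic bytes")
    # Assumption: tokenizer.json is stored next to the model file.
    if not (model.parent / "tokenizer.json").is_file():
        problems.append("tokenizer.json not found next to the model")
    return problems


# Hypothetical path; replace it with the location of your downloaded model.
print(check_model_files("models/your-model.gguf"))
```

A truncated or mislabeled download usually fails the magic-bytes check, which is cheaper to discover here than during model loading.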

2️⃣ Load into Application

- Open Oxide Lab

- Click "Select Model File" and specify the path to the .gguf file

- Optionally configure inference parameters

- Click "Load"

3️⃣ Start Chatting

- Enter your question or request

- Enable "Thinking" for deeper responses

- Adjust generation parameters to your taste

- Enjoy chatting with your personal AI!

🎨 Interface Features

📊 Informative Indicators

- Model loading progress with detailed stages

- Generation status indicators

- Visual display of AI thinking

⚡ Quick Actions

- Cancel model loading with one click

- Stop generation at any moment

- Quick parameter changes without reloading

🛡️ Privacy and Security

🔒 100% Local

- All computations happen on your computer

- No external requests or data sending

- Full control over your information

💾 Data Management

- Conversations are stored only for the current application session

- Models remain on your disk

- No hidden data collection

💡 Tips and Recommendations

🎯 For best results:

- Use thinking mode for complex tasks

- The app ships with default settings based on the recommendations of the Qwen3 developers, so you can use it without any tuning

- You can also change the defaults. Try a temperature of 0.7-1.0 for more creative output, or 0.1-0.3 for more focused and predictable output

- Increase context for working with long documents

⚡ Performance optimization:

- Both CPU and GPU (CUDA) are supported; use the CUDA build on an NVIDIA GPU for better performance

🎨 Creative usage:

- Enable thinking for text analysis and problem solving

- Experiment with high temperature for creative writing

- Use long context for working with large documents

🖥️ System Requirements and Limitations

Supported Platforms

- Windows 10/11 — full support

- Linux and macOS — in planning stage (not yet supported)

Models

- Supported: Qwen3 in GGUF format (currently the only supported architecture)

- Important: compatibility with other models is not yet guaranteed

Minimum Hardware Requirements

The smallest Qwen3 models (0.6B and 1.7B) run at acceptable speed and quality even on devices with a 2-core CPU and 4 GB of RAM. The 4B model also runs on such devices in Oxide Lab, although it is many times slower and needs more memory. Running it on comparable hardware is difficult with, for example, LM Studio without significant quality loss.

Context and Performance

- Effective context length depends on the selected model and the available RAM

- Practically achievable context length may be lower than theoretically declared by the model

- The larger the context, the higher the memory requirements and lower the generation speed
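The link between context length and memory can be estimated with a back-of-the-envelope calculation of the key/value cache a transformer keeps for every token in context. The sketch below assumes an unquantized 16-bit cache, and the architecture numbers are hypothetical placeholders; the real values come from the model's metadata, and Oxide Lab's actual memory use may differ.

```python
def kv_cache_bytes(context_len, n_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Approximate KV-cache size: one key and one value vector per layer, KV head and token."""
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_value


# Hypothetical architecture values, for illustration only.
for context_len in (2048, 8192, 32768):
    size = kv_cache_bytes(context_len, n_layers=36, n_kv_heads=8, head_dim=128)
    print(f"{context_len:>6} tokens -> {size / 2**30:.2f} GiB")
```

The cache grows linearly with context length, on top of the memory taken by the model weights themselves, which is why a long context can be out of reach on a 4 GB machine even when the model loads.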

🌟 Support the Project

If Oxide Lab has been useful to you:

- ⭐ Star the project

- 🐛 Report bugs

- 💡 Suggest new features

- 🤝 Share with friends

🙏 Acknowledgments

Oxide Lab is built with the help of amazing open-source technologies:

- Rust - Systems programming language that guarantees memory safety and performance

- Tauri - Framework for building fast and secure desktop applications

- Candle - Minimalist ML framework for Rust

- Phosphor Icons - Beautiful and consistent icon set

Made with ❤️ for the AI enthusiast community

Freedom, privacy and control over artificial intelligence